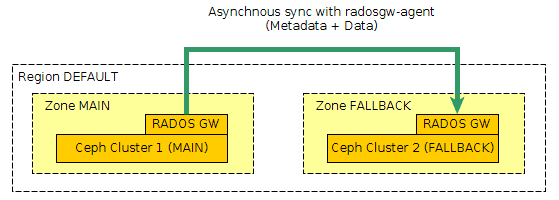

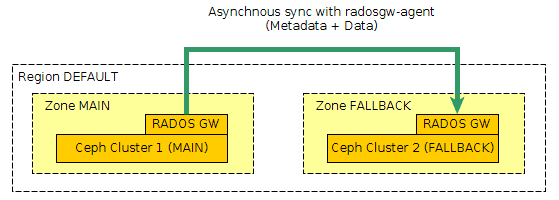

This is a simple example of a federated gateways configuration that sets up asynchronous replication between two Ceph clusters.

(This configuration is based on the Ceph documentation: http://ceph.com/docs/master/radosgw/federated-config/ )

Here I use only one region (“default”) and two zones (“main” and “fallback”), one for each cluster.

Note that in this example I use three placement targets (default, hot, cold), which map respectively to the pools .main.rgw.buckets, .main.rgw.hot.buckets and .main.rgw.cold.buckets. Be careful to replace the tags {MAIN_USER_ACCESS}, {MAIN_USER_SECRET}, {FALLBACK_USER_ACESS} and {FALLBACK_USER_SECRET} with the corresponding values.
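Replacing the tags by hand in three files and several commands is easy to get wrong. Here is a minimal Python sketch of one way to do the substitution. The function name `fill_placeholders` and the example values are my own and purely illustrative; the only thing taken from this article is the `{TAG}` naming of the placeholders.

```python
import re

# Matches tags such as {MAIN_USER_ACCESS}; the braces of the JSON itself
# are never followed directly by an upper-case name, so they are left alone.
PLACEHOLDER = re.compile(r"\{([A-Z_]+)\}")


def fill_placeholders(text, values):
    """Replace every {TAG} in text with values[TAG]; fail on unknown tags."""
    def substitute(match):
        tag = match.group(1)
        if tag not in values:
            raise KeyError(f"no value supplied for placeholder {{{tag}}}")
        return values[tag]

    return PLACEHOLDER.sub(substitute, text)


# Illustrative values only, not real credentials.
example_values = {
    "MAIN_USER_ACCESS": "EXAMPLEACCESSKEY",
    "MAIN_USER_SECRET": "example-secret",
}
snippet = '"access_key": "{MAIN_USER_ACCESS}", "secret_key": "{MAIN_USER_SECRET}"'
print(fill_placeholders(snippet, example_values))
```

Failing on an unknown tag is deliberate: a placeholder left in a zone file would otherwise end up as a literal key.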

First I created the region and zone files, which are required on both clusters:

The region file “region.conf.json” :

    { "name": "default",
      "api_name": "default",
      "is_master": "true",
      "endpoints": [
            "http:\/\/s3.mydomain.com:80\/"],
      "master_zone": "main",
      "zones": [
            { "name": "main",
              "endpoints": [
                    "http:\/\/s3.mydomain.com:80\/"],
              "log_meta": "true",
              "log_data": "true"},
            { "name": "fallback",
              "endpoints": [
                    "http:\/\/s3-fallback.mydomain.com:80\/"],
              "log_meta": "true",
              "log_data": "true"}],
      "placement_targets": [
            { "name": "default-placement",
              "tags": []},
            { "name": "cold-placement",
              "tags": []},
            { "name": "hot-placement",
              "tags": []}],
      "default_placement": "default-placement"}

A zone file “zone-main.conf.json” :

    { "domain_root": ".main.domain.rgw",
      "control_pool": ".main.rgw.control",
      "gc_pool": ".main.rgw.gc",
      "log_pool": ".main.log",
      "intent_log_pool": ".main.intent-log",
      "usage_log_pool": ".main.usage",
      "user_keys_pool": ".main.users",
      "user_email_pool": ".main.users.email",
      "user_swift_pool": ".main.users.swift",
      "user_uid_pool": ".main.users.uid",
      "system_key": {
          "access_key": "{MAIN_USER_ACCESS}",
          "secret_key": "{MAIN_USER_SECRET}"},
      "placement_pools": [
            { "key": "default-placement",
              "val": { "index_pool": ".main.rgw.buckets.index",
                  "data_pool": ".main.rgw.buckets",
                  "data_extra_pool": ".main.rgw.buckets.extra"}},
            { "key": "cold-placement",
              "val": { "index_pool": ".main.rgw.buckets.index",
                  "data_pool": ".main.rgw.cold.buckets",
                  "data_extra_pool": ".main.rgw.buckets.extra"}},
            { "key": "hot-placement",
              "val": { "index_pool": ".main.rgw.buckets.index",
                  "data_pool": ".main.rgw.hot.buckets",
                  "data_extra_pool": ".main.rgw.buckets.extra"}}]}

And a zone file “zone-fallback.conf.json” :

    { "domain_root": ".fallback.domain.rgw",
      "control_pool": ".fallback.rgw.control",
      "gc_pool": ".fallback.rgw.gc",
      "log_pool": ".fallback.log",
      "intent_log_pool": ".fallback.intent-log",
      "usage_log_pool": ".fallback.usage",
      "user_keys_pool": ".fallback.users",
      "user_email_pool": ".fallback.users.email",
      "user_swift_pool": ".fallback.users.swift",
      "user_uid_pool": ".fallback.users.uid",
      "system_key": {
          "access_key": "{FALLBACK_USER_ACESS}",
          "secret_key": "{FALLBACK_USER_SECRET}"
      },
      "placement_pools": [
            { "key": "default-placement",
              "val": { "index_pool": ".fallback.rgw.buckets.index",
                  "data_pool": ".fallback.rgw.buckets",
                  "data_extra_pool": ".fallback.rgw.buckets.extra"}},
            { "key": "cold-placement",
              "val": { "index_pool": ".fallback.rgw.buckets.index",
                  "data_pool": ".fallback.rgw.cold.buckets",
                  "data_extra_pool": ".fallback.rgw.buckets.extra"}},
            { "key": "hot-placement",
              "val": { "index_pool": ".fallback.rgw.buckets.index",
                  "data_pool": ".fallback.rgw.hot.buckets",
                  "data_extra_pool": ".fallback.rgw.buckets.extra"}}]}
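Since the region file and the two zone files have to agree with each other (every placement target needs pools in every zone, and the master zone must be one of the listed zones), a quick cross-check before loading them can save a debugging round. Below is a minimal Python sketch of such a check. The function name `check_federation` and the list of checks are my own choice, not something radosgw provides, and the demo data is a cut-down stand-in for the files above; in practice you would pass in the dictionaries obtained from `json.load`.

```python
def check_federation(region, zones):
    """Return a list of problems; an empty list means the files agree.

    region: dict parsed from the region file
    zones:  dict mapping zone name -> dict parsed from that zone's file
    """
    problems = []

    region_zones = {zone["name"] for zone in region["zones"]}
    if region["master_zone"] not in region_zones:
        problems.append(
            f"master_zone {region['master_zone']!r} is not listed in zones")

    targets = {target["name"] for target in region["placement_targets"]}
    if region["default_placement"] not in targets:
        problems.append(
            f"default_placement {region['default_placement']!r} "
            "is not a placement target")

    for name in sorted(region_zones):
        if name not in zones:
            problems.append(f"zone {name!r} has no zone file")
            continue
        pools = {entry["key"] for entry in zones[name]["placement_pools"]}
        for missing in sorted(targets - pools):
            problems.append(
                f"zone {name!r} has no pools for placement target {missing!r}")

    return problems


# Cut-down demo data with one deliberate mistake in the fallback zone.
demo_region = {
    "master_zone": "main",
    "zones": [{"name": "main"}, {"name": "fallback"}],
    "placement_targets": [{"name": "default-placement"},
                          {"name": "hot-placement"}],
    "default_placement": "default-placement",
}
demo_zones = {
    "main": {"placement_pools": [{"key": "default-placement"},
                                 {"key": "hot-placement"}]},
    "fallback": {"placement_pools": [{"key": "default-placement"}]},
}
for problem in check_federation(demo_region, demo_zones):
    print(problem)
```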

On the first cluster (MAIN)

I created the pools :

    ceph osd pool create .rgw.root 16 16
    ceph osd pool create .main.rgw.root 16 16
    ceph osd pool create .main.domain.rgw 16 16
    ceph osd pool create .main.rgw.control 16 16
    ceph osd pool create .main.rgw.gc 16 16
    ceph osd pool create .main.rgw.buckets 512 512
    ceph osd pool create .main.rgw.hot.buckets 512 512
    ceph osd pool create .main.rgw.cold.buckets 512 512
    ceph osd pool create .main.rgw.buckets.index 32 32
    ceph osd pool create .main.rgw.buckets.extra 16 16
    ceph osd pool create .main.log 16 16
    ceph osd pool create .main.intent-log 16 16
    ceph osd pool create .main.usage 16 16
    ceph osd pool create .main.users 16 16
    ceph osd pool create .main.users.email 16 16
    ceph osd pool create .main.users.swift 16 16
    ceph osd pool create .main.users.uid 16 16
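Most of these pool names are exactly the ones referenced in the zone file, so the list can be derived from it instead of being typed twice. The following Python sketch only prints the commands (a dry run). The helper names `pools_from_zone` and `pool_create_commands` are hypothetical, and the single `pg_num` default is a simplification: in the list above I use 512 for the bucket data pools and 32 for the index pool, so adjust the values before running anything. The two root pools (`.rgw.root` and the zone root pool) do not appear in the zone file and still have to be added by hand.

```python
def pools_from_zone(zone):
    """Collect every pool name referenced by a parsed zone file."""
    pools = {
        value
        for key, value in zone.items()
        if isinstance(value, str)
        and (key.endswith("_pool") or key == "domain_root")
    }
    for entry in zone.get("placement_pools", []):
        # index_pool, data_pool and data_extra_pool of each placement target
        pools.update(entry["val"].values())
    return sorted(pools)


def pool_create_commands(zone, pg_num=16):
    """Build (but do not run) one 'ceph osd pool create' line per pool."""
    return [
        f"ceph osd pool create {name} {pg_num} {pg_num}"
        for name in pools_from_zone(zone)
    ]


# Cut-down stand-in for zone-main.conf.json.
demo_zone = {
    "domain_root": ".main.domain.rgw",
    "gc_pool": ".main.rgw.gc",
    "system_key": {"access_key": "x", "secret_key": "y"},
    "placement_pools": [
        {"key": "default-placement",
         "val": {"index_pool": ".main.rgw.buckets.index",
                 "data_pool": ".main.rgw.buckets"}},
    ],
}
for command in pool_create_commands(demo_zone):
    print(command)
```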

I configured the region and the zones, and added the system users:

    radosgw-admin region set --name client.radosgw.main < region.conf.json
    radosgw-admin zone set --rgw-zone=main --name client.radosgw.main < zone-main.conf.json
    radosgw-admin zone set --rgw-zone=fallback --name client.radosgw.main < zone-fallback.conf.json
    radosgw-admin regionmap update --name client.radosgw.main
    radosgw-admin user create --uid="main" --display-name="Zone main" --name client.radosgw.main --system --access-key={MAIN_USER_ACCESS} --secret={MAIN_USER_SECRET}
    radosgw-admin user create --uid="fallback" --display-name="Zone fallback" --name client.radosgw.main --system --access-key={FALLBACK_USER_ACESS} --secret={FALLBACK_USER_SECRET}

Set up the RadosGW configuration in ceph.conf on cluster MAIN:

    [client.radosgw.main]
    host = ceph-main-radosgw-01
    rgw region = default
    rgw region root pool = .rgw.root
    rgw zone = main
    rgw zone root pool = .main.rgw.root
    rgw frontends = "civetweb port=80"
    rgw dns name = s3.mydomain.com
    keyring = /etc/ceph/ceph.client.radosgw.keyring
    rgw_socket_path = /var/run/ceph/radosgw.sock

I needed to create a keyring for [client.radosgw.main] in /etc/ceph/ceph.client.radosgw.keyring; see the Ceph documentation.

Then, start/restart radosgw for cluster MAIN.

On the other Ceph cluster (FALLBACK)

I created the pools :

    ceph osd pool create .rgw.root 16 16
    ceph osd pool create .fallback.rgw.root 16 16
    ceph osd pool create .fallback.domain.rgw 16 16
    ceph osd pool create .fallback.rgw.control 16 16
    ceph osd pool create .fallback.rgw.gc 16 16
    ceph osd pool create .fallback.rgw.buckets 512 512
    ceph osd pool create .fallback.rgw.hot.buckets 512 512
    ceph osd pool create .fallback.rgw.cold.buckets 512 512
    ceph osd pool create .fallback.rgw.buckets.index 32 32
    ceph osd pool create .fallback.rgw.buckets.extra 16 16
    ceph osd pool create .fallback.log 16 16
    ceph osd pool create .fallback.intent-log 16 16
    ceph osd pool create .fallback.usage 16 16
    ceph osd pool create .fallback.users 16 16
    ceph osd pool create .fallback.users.email 16 16
    ceph osd pool create .fallback.users.swift 16 16
    ceph osd pool create .fallback.users.uid 16 16

I configured the region and the zones, and added the system users:

    radosgw-admin region set --name client.radosgw.fallback < region.conf.json
    radosgw-admin zone set --rgw-zone=fallback --name client.radosgw.fallback < zone-fallback.conf.json
    radosgw-admin zone set --rgw-zone=main --name client.radosgw.fallback < zone-main.conf.json
    radosgw-admin regionmap update --name client.radosgw.fallback
    radosgw-admin user create --uid="fallback" --display-name="Zone fallback" --name client.radosgw.fallback --system --access-key={FALLBACK_USER_ACESS} --secret={FALLBACK_USER_SECRET}
    radosgw-admin user create --uid="main" --display-name="Zone main" --name client.radosgw.fallback --system --access-key={MAIN_USER_ACCESS} --secret={MAIN_USER_SECRET}
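The command lists on the two clusters are mirror images of each other: only the `--name` of the local gateway and the order of the zones change. As a rough illustration, this Python sketch generates the list for either side so that the two cannot drift apart. The function `setup_commands` is a hypothetical helper of mine, it only builds strings and runs nothing, and the key values are still the placeholder tags used throughout this article.

```python
def setup_commands(local, remote, keys):
    """Build the radosgw-admin command lines for one cluster.

    local/remote: zone names, e.g. "main" and "fallback"
    keys: dict mapping zone name -> (access_key, secret_key)
    """
    name = f"client.radosgw.{local}"
    commands = [f"radosgw-admin region set --name {name} < region.conf.json"]

    # Both zones are declared on both clusters, local zone first.
    for zone in (local, remote):
        commands.append(
            f"radosgw-admin zone set --rgw-zone={zone} --name {name} "
            f"< zone-{zone}.conf.json")

    commands.append(f"radosgw-admin regionmap update --name {name}")

    # Both system users exist on both clusters with identical keys.
    for zone in (local, remote):
        access, secret = keys[zone]
        commands.append(
            f'radosgw-admin user create --uid="{zone}" '
            f'--display-name="Zone {zone}" --name {name} --system '
            f"--access-key={access} --secret={secret}")

    return commands


placeholder_keys = {
    "main": ("{MAIN_USER_ACCESS}", "{MAIN_USER_SECRET}"),
    "fallback": ("{FALLBACK_USER_ACESS}", "{FALLBACK_USER_SECRET}"),
}
for command in setup_commands("fallback", "main", placeholder_keys):
    print(command)
```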

Set up the RadosGW configuration in ceph.conf on cluster FALLBACK:

    [client.radosgw.fallback]
    host = ceph-fallback-radosgw-01
    rgw region = default
    rgw region root pool = .rgw.root
    rgw zone = fallback
    rgw zone root pool = .fallback.rgw.root
    rgw frontends = "civetweb port=80"
    rgw dns name = s3-fallback.mydomain.com
    keyring = /etc/ceph/ceph.client.radosgw.keyring
    rgw_socket_path = /var/run/ceph/radosgw.sock

I also needed to create a keyring for [client.radosgw.fallback] in /etc/ceph/ceph.client.radosgw.keyring, and then start radosgw for cluster FALLBACK.

Finally, set up the RadosGW agent

/etc/ceph/radosgw-agent/default.conf :

    src_zone: main
    source: http://s3.mydomain.com:80
    src_access_key: {MAIN_USER_ACCESS}
    src_secret_key: {MAIN_USER_SECRET}
    dest_zone: fallback
    destination: http://s3-fallback.mydomain.com:80
    dest_access_key: {FALLBACK_USER_ACESS}
    dest_secret_key: {FALLBACK_USER_SECRET}
    log_file: /var/log/radosgw/radosgw-sync.log

Then start the agent:

    /etc/init.d/radosgw-agent start

After that, there is still a little suspense… I then create a bucket with some data on s3.mydomain.com and verify that it is properly synchronized to the other cluster.
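To make that verification less of an eyeball exercise, the object listings of the same bucket on both endpoints can be compared. The sketch below is only the comparison step. It assumes you have already fetched, with whatever S3 client you use, a mapping of object key to ETag for each side; the function name `sync_report` is mine, and comparing ETags is my assumption of a reasonable equality test, not something the agent documents. Because replication is asynchronous, an object listed as missing may simply not have been copied yet.

```python
def sync_report(source, destination):
    """Compare two {object key: ETag} mappings, one per zone.

    Returns the keys missing on the destination and the keys present
    on both sides but with different ETags.
    """
    missing = sorted(key for key in source if key not in destination)
    different = sorted(
        key for key in source
        if key in destination and source[key] != destination[key])
    return {"missing": missing, "different": different}


# Made-up listings for illustration.
main_listing = {"a.txt": "etag-1", "b.txt": "etag-2", "c.txt": "etag-3"}
fallback_listing = {"a.txt": "etag-1", "b.txt": "etag-old"}

report = sync_report(main_listing, fallback_listing)
print("missing on fallback:", report["missing"])
print("different content:", report["different"])
```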

For debugging, you can enable logging on the RadosGW on each side, and start the agent with radosgw-agent -v -c /etc/ceph/radosgw-agent/default.conf

These steps work for me. Getting everything in place is not always straightforward: whenever I set up a sync it rarely works the first time, but it always ends up running.